Introduction

The study of Phonology began in the nineteenth century (Lucid, 2008, p.1). This was a period in history when attempts were made by missionaries to codify some languages—such as native African and Asian—in order to spread the Gospel. Some of these languages varied significantly from the European languages—in terms of speech and diction—and this made the task even harder.

When the study of Phonology began, very few languages had already been written. The first is Greek, from which most letters of the English alphabet have been derived (Kager, 1999). It is considered one of the ancient languages because the Greeks were amongst the first people in the world to write. The others are Egyptian and Chinese. Most alphabets and numerals used in modern writing have been derived from these ancient languages.

The essence of any language is to communicate an idea. The representation of speech in writing before the eighteenth century paid little attention to dynamics of speech such as morphemes, tone and pronunciation. The ancient languages were mostly written in the form of pictographs or numerals. The writers understood what the pictures and symbols meant. However, as more people adopted the culture of writing in their respective countries, the art of writing developed into a study. It is this development that led to the study of Phonology.

Today, all languages known to man have been codified. The advancement in technology and the adoption of the modern lifestyle are some of the factors that have led to this phenomenon. The rates of literacy are much higher than they were in the eighteenth century. Nowadays, any literate person can comfortably write his or her native language. In addition, it is very common for people to study languages other than their own.

The point of concern in this paper is the accuracy of the codification of a particular language. This question relates to how well the actual speech has been reduced to writing and the rules that guided the codification.

Different scholars have had varying views concerning the rules guiding the writing of a particular language. These rules concern the basics of pronunciation and sound, and how well these have been captured in the written language. These differing views have given rise to different theories, each dictating its own rules of writing. Initially, when the study of Phonology began, scholars mainly concentrated on how sound is produced and how it could be represented in writing. The writers of the nineteenth century advanced the study of Phonology to include the dynamics of language such as the phonetics of articulation and acoustics.

It was during the late nineteenth century that the various theories of Phonology began. The aim of each theory was to qualify a previous one and proffer an alternative solution. Whereas this has advanced the study of Phonology, it has also created a number of competing rules of writing. The multiplicity of these theories has made it difficult to determine the most plausible one.

There are so many theories because every language is unique. Utterances, intonation and tonal variation differ from one language to another. In addition, some languages were originally written by people who were not native speakers of the language. This resulted in the omission of crucial sounds that formed the basis of speech in the language. In some cases, some sounds were misrepresented in writing. The writer may have applied a theory of Phonology that was inappropriate for the language, or he may have lacked particular knowledge when he was codifying the language.

The first of these theories is the Contrastive Analysis Hypothesis, which is now considered dead due to lack of usage (Keys, 2002, p. 4). It suggests that by contrasting a person's first language with a second one that they intend to study, it is possible to predict the areas of the second language in which the person will have difficulty.

This theory was rejected after being used for some time because it was found to be inefficient. Unfortunately, as far as some languages were concerned, the theory had already been applied during their codification. As a result, the written works varied greatly from the actual delivery of speech: some utterances were not catered for, tonal variation was absolutely left out and similar words and pronunciations could not be differentiated or were completely left out. As the scholars were only focused on sound production, these other aspects of the language were not incorporated.

After the natives of the languages became literate and realized this mistake, the differing theories of Phonology began. They sought to come up with other theories that were more appropriate in codifying the language. They criticized the inadequacies of the former theory and replaced them with some of their own making. In some instances, the critics were not native speakers of the language but students of Phonology. More theories came up and added to the many others that already existed. Nothing was done at the grassroots.

This study seeks to answer the question of whether these differences can be set aside. The paper attempts to reconcile the various theories of Phonology and determine whether there can be one theory that caters for the codification of all languages. It examines the reactions of Phonology to the rapid advancement in technology and the study of language. Lastly, this paper analyses the possibility of adopting the theory of Metrical Phonology as an answer to the question of which rule is to be applied in the codification of any language.

Assumptions

All languages have certain aspects that are similar. These are known as universals. For example, all languages have vowels and consonants. It does not matter how the alphabets are represented—whether symbols or letters. The fact remains that there has to be a difference between vowels and consonants; even if they are known by different names. This presumption applies even where vowels of a particular language exceed the consonants.

Another assumption made in this study is that all languages can be written. It does not matter whether the true position on the ground is that they have not yet been codified. The general presumption is that as long as the language can be spoken, then it can be reduced to writing. Secondly, it is immaterial whether the language has been discovered or not; or whether the native speakers are literate.

The third assumption is that any language has syllables. The study of Phonology would be impossible if there were no syllables in a language. Therefore, the mention of the word language in this study is made with the presumption that the language referred to has syllables.

The fourth presumption of this study is that every language has a minimum of two voiceless plosives. A voiceless plosive is a particular kind of consonant sound that is used in numerous languages. The most common voiceless plosive across the languages spoken in the world is the velar sound /k/. Therefore, this paper will presume that all languages have at least two voiceless plosives and that one of them is /k/.

Most languages use sonorants in speech. In this study, this presumption is made for the majority of languages. The study also recognizes that there are some languages, such as the Mongolian ones, that are completely devoid of such sounds.

Another presumption that shall be made in this paper is that every language has its fair share of complex and simple sounds. The assumption is that the complex sounds appear less frequently than the simple ones. This presumption also puts into consideration the fact that a language with complex sounds also contains other sounds—although few—that are simple.

Presentation of Data and Analysis

Presentation of Data

This section examines the details of the theories that have been propounded in Phonology. Keys (2002) discusses the theory of Glossematic and Stratificational Phonology that was initially proposed by Hjelmslev and Uldall. It refers to Phonology as phonematics. The idea behind this theory is that the phoneme ought to be defined by the purpose it serves in a particular language and not by other tangible or mental influence. Here, sound is classified and categorized based on how it alters and how it is distributed within speech. This theory developed the study of Phonology through the acceptance of certain sounds that represented particular utterances, although their very nature would suggest that they be pronounced otherwise. For example:

- /e:/ for /ea/;

- /n/ for /ng/.

These sounds are found in the French and Danish languages respectively (Leather, 1986).

The beauty of this theory is that it can be applied to languages that have sounds which cannot be catered for through combinations of the available letters. For instance, the English alphabet has only 26 letters, and combinations of consonants and vowels, of vowels alone or of consonants alone can represent the sounds of the English language. But what happens when the English alphabet is adopted for a language where these combinations would not bring out the expected sound? The use of stress would be sufficient to mark the various degrees of prominence of sound in speech, but it would not make up for the missing sound. Thus the Glossematic and Stratificational Theory could be adopted in these circumstances.

It is worth noting that this theory is no longer in use. It has been overtaken by time due to the formation of other theories of Phonology and the various advancements in the area of study. For private purposes, however, it can still apply if supported by persuasive arguments in regard to the same.

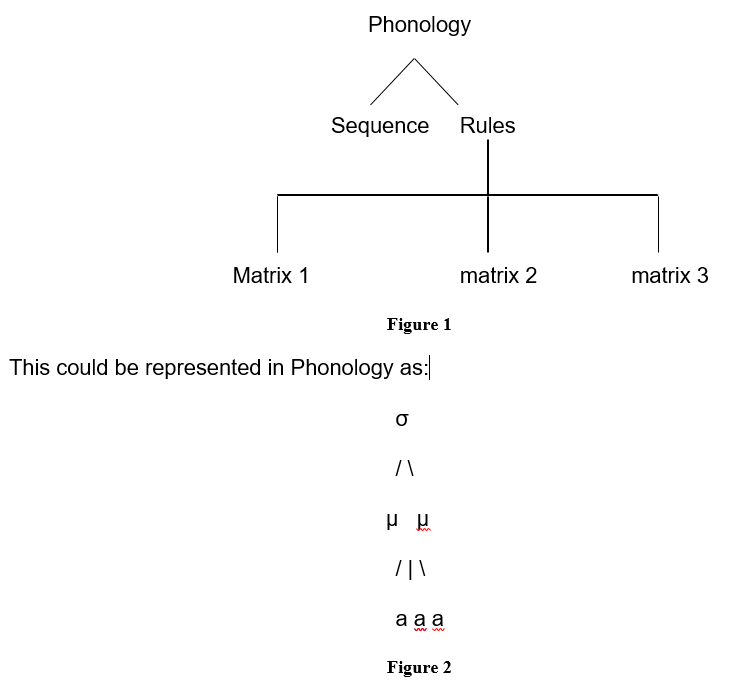

The theory of Orthodox Generative Phonology is classified as one of the most modern theories in the area of Phonology. It is based on psychological ideology and encompasses a hierarchical model. The theory views Phonology as a syntax that is coupled with grammatical sequences. The grammatical sequences only make sense if incorporated with a set of lexical rules. Together these two modify the speech by introducing other sounds that form the foundation of the original sound.

The result is a whole new pronunciation that is derived from the basic structure of the original sound. However, for this structure to work, other feature matrices must feed the lexical rule (Anderson, 1985). Thus, the model would appear as follows:

The problem with this theory is that it appears too complicated. However, when understood it makes sense and can be used in the writing of language; to develop sound in order to represent a particular utterance using a given set of alphabets. Words that do not belong to the original alphabet can be formulated and others that already exist can be re-invented in order to represent the actual speech.
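The idea that an underlying form passes through an ordered set of rules to yield a surface form can be loosely illustrated in code. The following is only a minimal sketch under stated assumptions: the two rules, the "+" boundary symbol and the English plural examples are hypothetical illustrations chosen for this sketch, not rules taken from Anderson (1985) or from the theory's original formulation.

```python
import re

# Hypothetical ordered rules (assumptions for illustration only):
# each entry is (description, regex pattern, replacement).
RULES = [
    # The plural suffix z becomes s after a voiceless consonant.
    ("devoice suffix after voiceless consonant", r"([ptkf])\+z", r"\1+s"),
    # The morpheme boundary "+" is erased once the rules have applied.
    ("erase morpheme boundary", r"\+", ""),
]


def derive(underlying, rules=RULES):
    """Apply each rule in order and return every intermediate form.

    The first element is the underlying form and the last element
    is the surface form.
    """
    forms = [underlying]
    for _description, pattern, replacement in rules:
        forms.append(re.sub(pattern, replacement, forms[-1]))
    return forms


cats = derive("kæt+z")   # underlying form of "cats" in this toy notation
dogs = derive("dɔg+z")   # underlying form of "dogs" in this toy notation
print(" -> ".join(cats))
print(" -> ".join(dogs))
```

Keeping every intermediate form makes the layering visible: the surface pronunciation is reached only after each rule has had its turn, and reordering the rules can change the result.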

This theory can accommodate the use of stress for various purposes, such as emphasis and the denotation of quantity. Although the use of stress emanates from another theory, it could be combined with the theory of Orthodox Generative Phonology so as to make the codification of language simpler. For example, stress can be used on a particular sound in order to emphasize it. Since the sound already originates from a foundational phoneme, the resulting word (the one referred to as the surface structure) can be differentiated from its original through the use of stress.

The theory of Metrical Phonology is premised on the idea of stress. It is likened to the general study of Phonology, which began with the examination of the production of sounds and now incorporates much more than the sound itself. This theory began with the analysis of stress in the production of speech sounds and now deals with far more than this. It applies rules to words and phrases in such a way that the dominance of a single phoneme is defined in relation to the others within the same word or phrase (Hayes, 1995). Before the development of this theory, the previous schools of thought had used the prosodic word as the distinctive characteristic that applied to basic phonemes.

This theory has been refined over the years into a number of rules that guide the use of stress in Phonology. For example:

- The application of stress can be used to determine various limitations of sound. It determines the degree of prominence of a syllable in a sentence (Williams, 1982).

- Every word must have at least one stress symbol: [ˈ] or [ˌ]

- Stress could have a rhythmic attribute, as in the words Apalachicola and Califragilistic.

- Stress may also be used to denote quantity.
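The requirement that every word carry at least one stress symbol lends itself to a simple mechanical check. The sketch below assumes that stress is written with the IPA primary and secondary stress marks and that words in a transcription are separated by spaces. The function names and the sample transcription of Apalachicola are illustrative assumptions, not material from the cited sources.

```python
PRIMARY = "\u02c8"    # IPA primary stress mark
SECONDARY = "\u02cc"  # IPA secondary stress mark


def stress_marks(transcription):
    """Return the stress marks found in a transcription, in order."""
    return [ch for ch in transcription if ch in (PRIMARY, SECONDARY)]


def is_well_formed(transcription):
    """True if every space-separated word has at least one stress mark."""
    words = transcription.split()
    return bool(words) and all(stress_marks(word) for word in words)


# Illustrative transcription (an assumption, not taken from the source):
# secondary stress recurs at intervals before the primary stress.
apalachicola = (SECONDARY + "æpə" + SECONDARY + "lætʃɪ" + PRIMARY + "koʊlə")

print(stress_marks(apalachicola))
print(is_well_formed(apalachicola))
print(is_well_formed("kæt"))
```

The ordered list of marks returned for a long word also gives a rough picture of the rhythmic attribute mentioned above, since it shows where the secondary stresses fall relative to the primary one.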

These are just a few examples of the use of stress in Phonology. The Metrical Phonology theory is the most widely accepted theory in Phonology due to its simplicity. It is easy to comprehend as far as sounds are concerned, and it is also user-friendly because it simplifies reading and pronunciation. It also simplifies the writing of ancient native languages. The stress mark can also be used in place of missing syllables in sound. This makes the theory adaptable for languages that have sounds which cannot be expressed with a given alphabet.

Analysis of Data

The theories that have been discussed above have proffered a number of rules regarding the use of Phonology in speech. A good rule is one that codifies a particular language in such a way that the actual speech is well represented in writing. Some theories of Phonology are no longer in use, and this raises the question of whether the ones being applied offer the best rules for the codification of language or for the rectification of a language that has already been written.

It is clear that there are major issues relating to the codification of ancient languages, especially where a language was initially codified by a non-native speaker. What began as a mere study of the production of sound has now become a complex area of study that goes far beyond sound production and tonal variation. This has led to a number of theories that have given rise to rules regarding the codification of sound (Wiese, 1996). These rules were formulated in the aforementioned theories and it is difficult to determine which one is to be followed.

Perhaps the starting point of codifying these languages is to determine the appropriate alphabet to use: English, Egyptian, Chinese or Greek. The importance of this is that every language is unique in speech and will consequently be unique in writing. Some have more consonants than others. Some languages have many vowels, while numerous others have syllables that are simply unique to the language that is being written. Identifying the appropriate alphabet to use will do away with the need for the creation of new syllables and it will also adapt the language to the universal rules of writing.

The only problem with this step arises where the language had previously been codified without bearing in mind its special features, namely pronunciation and tone. The issue arises when there is a recommendation to adopt a whole different alphabet to correct the mistakes of the initial codification. This could compromise the written validity of the whole language. Moreover, other sectors that rely on the use of the language could suffer a huge communication blow before the new alphabet can be studied, mastered and applied in day-to-day business.

An alternative solution lies in the introduction of new syllables by combining those which already exist in the alphabet so that the combination can represent the sounds that have been left out. For example, the Swahili language has adopted the English alphabet as far as writing is concerned. However, the Swahili language itself had adopted a number of words, and with them sounds, from Arabic. When the language was first codified by the European missionaries, a number of pronunciations that existed in Swahili were left out. For instance:

- /kh/ exists in Swahili but not English.

- /dh/ is the making of both Arabic and Swahili.

- /dz/ is an adaptation of a syllable from a native language into the Swahili language.
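Representing a missing sound by combining existing letters means that a reader, or a program, must treat the combination as a single unit. The following is a minimal sketch of that idea. It assumes a greedy left-to-right match over the three letter pairs listed above, and the function name and the sample word dhahabu are illustrative choices rather than material from the source.

```python
# Letter pairs named in the text that stand for a single sound.
DIGRAPHS = ("kh", "dh", "dz")


def segment(word, digraphs=DIGRAPHS):
    """Split a written word into units, keeping listed digraphs whole."""
    units = []
    lowered = word.lower()
    i = 0
    while i < len(lowered):
        pair = lowered[i:i + 2]
        if pair in digraphs:
            # Two letters that together represent one sound.
            units.append(pair)
            i += 2
        else:
            units.append(lowered[i])
            i += 1
    return units


print(segment("dhahabu"))  # sample word chosen for illustration
```

A greedy match of this kind is a simplification: it would wrongly merge two adjacent letters that belong to separate sounds, so a fuller treatment would need knowledge of the language's syllable structure.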

This anomaly was discovered in the late nineties and recommendations were made, and subsequently adopted, to accommodate the changes in the language. As matters now stand, these changes have been incorporated into the Swahili language and have gained universal recognition.

Therefore it is possible to reconcile the differences between the speech of a language that had been inaccurately reduced to writing and the actual language itself. This, however, does have its limitations. It is true that there are universal rules relating to language—these were looked at under the second part of the paper. It is safe to conclude that these rules exist automatically as of the time the language is spoken. Therefore, as far as the universal rules of writing language are concerned, the language remains valid as it was first codified.

The problem comes in where that language has to be written down. Whose theories should be applied and to what extent? The best solution to this problem again relates to the choice of alphabet. Every alphabet has its own rules of application. However, these go only as far as the original language is concerned. For example, the Greek alphabet and its rules of application only apply as far as the Greek language is concerned. The rules change when another language adopts the alphabet. The reason is that it is the language that dictates the rules of speech. Or could it be another question of the chicken and the egg: which came first?

A closer examination of the codification of language shows that it is the oral language that dictates the rules. This can be looked at from two angles. Firstly, oral communication has been in existence since time immemorial. Man began to talk before he could write. The creators of the alphabets simply came up with symbols to represent sound and then proceeded to determine how the speech shall be represented in written form.

The second perception is from the point of view of the individual language. Suppose the writer was to come up with his or her own set of alphabets and then proceed to codify the language. Upon what basis would the writer differentiate the alphabets? The answer has to be pronunciation because it is only through uttering the sounds that the differences come out. Otherwise the letters would constitute empty marks on paper without having any meaning attached to them.

Once the alphabet and sounds have been defined then the writing can begin.

Conclusion

The theories of Phonology remain relevant in the world today even if some of them may have become redundant due to minimal usage. There are very many languages today and due to the peculiar characteristic of every language, one of these theories could be deployed in the codification of a particular language. Some theories can be combined in order to create a sound in the writing of language. Restricting oneself to the rules of one theory may adversely affect the language being written down through the omission of relevant sounds or the inclusion of sounds that do not belong to the actual speech as far as the language is concerned.

The reconciliation of ancient languages and the theories of Phonology is highly possible. This can be looked at from two points of view: universal application of the rules of language and the peculiar rules defining the language itself. Universal rules exist automatically upon the creation of the language whereas the specific rules are made in accordance with the unique aspects of the language.

The theory of Metrical Phonology is the most accepted theory due to its simplicity. The use of stress marks to emphasize certain aspects of sound makes the written language conform to its actual utterance in speech. The rules of stress that have been laid down in this theory have developed the study of Phonology and reduced the difficulty of representing sounds of a language that could not otherwise have been expressed with a given alphabet. The theory can still be combined with others in the writing of language so as to cater for all aspects of speech in writing.

References

Anderson, S. (1985). Phonology in the Twentieth Century: Theories of Rules and Theories of Representation. Chicago, US: University of Chicago Press.

Hayes, B. (1995). Metrical Stress Theory. Chicago, US: University of Chicago Press.

Kager, R. (1999). Optimality Theory: A Textbook. Cambridge, UK: Cambridge University Press.

Key, K. (2002). Interlanguage Phonology: Theory and Empirical Data. Web.

Leather, J. (1986). Sound Patterns in Second Language Acquisition. Dordrecht, Holland: Foris Press.

Lucid, C. (2008). Latest Developments on Phonetics and Phonology. Web.

Wiese, R. (2000). The Phonology of German. Cambridge, London: University of Cambridge.

Williams, B. (1982). The Problem of Stress in Welsh. Cambridge, London: University of Cambridge.